Unlocking Insights with A/B Testing: A Comprehensive Guide


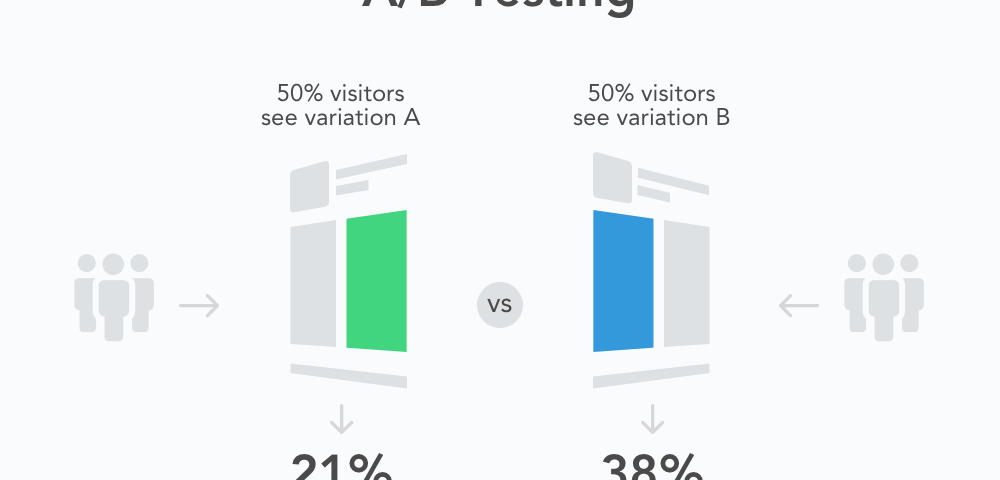

A/B testing, also known as split testing, is a technique used in digital marketing and user experience design to compare two versions of a webpage, app, or marketing campaign and determine which one performs better. By systematically testing variations and analyzing user behavior, organizations can make data-driven decisions to optimize their strategies and improve performance. This post covers the concept of A/B testing, its benefits, best practices, and practical tips for implementation.

What is A/B Testing?

Definition of A/B Testing

A/B testing is a controlled experiment where two or more variants of a webpage, app, or marketing campaign are tested against each other to determine which one produces better results. The variants, typically referred to as A and B, are randomly presented to users, and their behavior is tracked and analyzed to identify the most effective option.

Key Components of A/B Testing

Control Group

The control group sees the baseline or original version of the element being tested. It serves as the reference point against which each variant is compared.

Variants

Variants are the different versions of the element being tested. They typically contain one or more changes or variations from the control group, such as different headlines, images, or call-to-action buttons.

Randomization

Randomization assigns each user to the control group or to one of the variants purely by chance, so that the groups differ only in the version they see. This guards against selection bias and is a precondition for a valid statistical comparison of the results.
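One common way to implement random assignment is to hash a user identifier, so that the same user always lands in the same group. The sketch below is illustrative only: the function name `assign_variant`, the experiment label, and the user ID format are assumptions, not part of any particular testing tool.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Map a user to a variant deterministically, with roughly equal odds.

    Hashing the experiment name together with the user ID means a user
    keeps the same variant across visits, while different experiments
    split the same users independently.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Use the first 8 bytes of the hash as a large integer bucket.
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


# Hypothetical usage: split 10,000 made-up user IDs and count each group.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "homepage-headline")] += 1
print(counts)
```

The two counts should come out close to an even split. A persistent imbalance in a real test is usually a sign that the assignment or tracking is broken.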

Benefits of A/B Testing

Data-Driven Decision Making

A/B testing provides objective data on the performance of different variations, allowing organizations to make informed decisions based on empirical evidence rather than assumptions or intuition.

Continuous Improvement

By systematically testing variations and analyzing results, organizations can continuously iterate and improve their strategies, leading to incremental gains in performance over time.

Optimized User Experience

A/B testing helps identify elements of a webpage, app, or marketing campaign that resonate most with users, resulting in a more optimized and user-friendly experience.

Increased Conversions and Revenue

By identifying and implementing changes that lead to improved performance, organizations can increase conversions, such as sign-ups, purchases, or clicks, resulting in higher revenue and return on investment.

Best Practices for A/B Testing

Define Clear Objectives

Start by clearly defining the objectives of the A/B test, such as increasing conversions, improving engagement, or optimizing user experience. Clear objectives help guide the design of the test and ensure meaningful results.

Test One Variable at a Time

To accurately assess the impact of a change, test one variable at a time. When several variables change at once, it becomes difficult to tell which change caused the difference in performance.

Use Statistical Significance

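Ensure that the sample size for the A/B test is large enough to detect the effect you care about. A statistical significance test estimates how likely it is that a difference as large as the one observed would appear by chance alone if the variants actually performed the same; a low probability (commonly below 5%) suggests the difference is real rather than noise.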
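For conversion-style metrics, one standard way to check significance is a two-proportion z-test. The following is a minimal sketch using only the Python standard library; the function name and the visitor and conversion counts are made up for illustration, and a dedicated statistics package would be a reasonable choice in production.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    conv_a / n_a are conversions and visitors in the control group,
    conv_b / n_b the same for the variant.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both groups convert equally.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("Cannot test: pooled conversion rate is 0 or 1.")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 200 of 5,000 visitors convert on A, 250 of 5,000 on B.
z, p = two_proportion_z_test(200, 5000, 250, 5000)
print(f"z = {z:.2f}, p-value = {p:.4f}")
```

With these example counts the p-value falls below the conventional 0.05 threshold, so the difference would usually be treated as statistically significant. The threshold itself is a convention and should be chosen before the test starts.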

Monitor Key Metrics

Monitor key metrics throughout the duration of the A/B test to track performance and identify any unexpected results or anomalies. Key metrics may include conversion rates, click-through rates, bounce rates, and revenue.
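The metrics named above are simple ratios of raw counts, so they are easy to compute per variant. The sketch below uses common definitions (conversions per visitor, clicks per impression, single-page sessions per session); the class, field names, and numbers are hypothetical, and your analytics tool may define these metrics slightly differently.

```python
from dataclasses import dataclass


@dataclass
class VariantCounts:
    """Raw counts collected for one variant (field names are illustrative)."""
    visitors: int
    impressions: int
    clicks: int
    sessions: int
    bounced_sessions: int
    conversions: int
    revenue: float


def safe_ratio(numerator: float, denominator: float) -> float:
    """Divide, returning 0.0 when there is no data yet."""
    return numerator / denominator if denominator else 0.0


def summarize(c: VariantCounts) -> dict:
    """Compute the key metrics for one variant from its raw counts."""
    return {
        "conversion_rate": safe_ratio(c.conversions, c.visitors),
        "click_through_rate": safe_ratio(c.clicks, c.impressions),
        "bounce_rate": safe_ratio(c.bounced_sessions, c.sessions),
        "revenue_per_visitor": safe_ratio(c.revenue, c.visitors),
    }


# Hypothetical counts for one variant.
variant_b = VariantCounts(
    visitors=1000, impressions=4000, clicks=200,
    sessions=1200, bounced_sessions=480, conversions=50, revenue=2500.0,
)
print(summarize(variant_b))
```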

Allow Sufficient Time for Testing

Allow the A/B test to run long enough to collect the required sample size and to cover complete weekly cycles, since user behavior often differs between weekdays and weekends. The right duration depends on factors such as traffic volume, but a minimum of one to two weeks is typically recommended.
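A rough duration estimate can be derived from the sample size needed to detect a given lift. The sketch below uses the standard normal-approximation formula for comparing two proportions. The baseline rate, target lift, traffic figure, and the defaults of 5% significance and 80% power are all assumptions chosen for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline: float, relative_lift: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per group to detect the given lift."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def estimated_days(n_per_group: int, daily_visitors: int, groups: int = 2) -> int:
    """Days needed if all daily visitors are split evenly across the groups."""
    return math.ceil(n_per_group * groups / daily_visitors)


# Hypothetical scenario: 4% baseline conversion, hoping to detect a 25%
# relative lift (4% -> 5%), with 1,000 visitors entering the test per day.
n = sample_size_per_group(baseline=0.04, relative_lift=0.25)
print(n, "visitors per group,", estimated_days(n, daily_visitors=1000), "days")
```

Smaller expected lifts or lower traffic push the duration up quickly, which is why low-traffic pages often need considerably longer than two weeks.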

Practical Tips for A/B Testing

Start with High-Impact Changes

Begin A/B testing with changes that are likely to have a large effect on performance. Focus on elements such as headlines, calls-to-action, and page layouts that have a direct impact on user behavior.

Iterate Based on Results

Based on the results of the A/B test, iterate and make adjustments to further optimize performance. Implement successful changes across other pages or campaigns to maximize impact.

Document and Share Learnings

Document the results of A/B tests and share learnings with relevant stakeholders across the organization. Insights gained from A/B testing can inform future strategies and initiatives.

Consider User Segmentation

Consider segmenting users based on demographics, behavior, or other characteristics to better understand how different user groups respond to variations. This can help tailor experiences to specific audience segments.
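Segment-level analysis amounts to computing the same metric separately for each combination of segment and variant. The sketch below assumes each record is a hypothetical `(segment, variant, converted)` tuple; the segment names and data are invented for illustration.

```python
from collections import defaultdict


def conversion_by_segment(records):
    """Return conversion rate keyed by (segment, variant).

    Each record is a (segment, variant, converted) tuple, where
    converted is True if that user converted.
    """
    totals = defaultdict(lambda: [0, 0])  # [users, conversions]
    for segment, variant, converted in records:
        totals[(segment, variant)][0] += 1
        totals[(segment, variant)][1] += int(converted)
    return {key: conv / users for key, (users, conv) in totals.items()}


# Hypothetical records: device type is used as the segment.
records = [
    ("mobile", "A", False), ("mobile", "A", True),
    ("mobile", "B", True), ("mobile", "B", True),
    ("desktop", "A", True), ("desktop", "A", False),
    ("desktop", "B", False), ("desktop", "B", False),
]
for key, rate in sorted(conversion_by_segment(records).items()):
    print(key, f"{rate:.0%}")
```

Each segment contains fewer users than the full test, and checking many segments increases the chance of a false positive, so segment results are best treated as hypotheses for follow-up tests.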

Conclusion

A/B testing is a valuable technique for optimizing digital experiences and marketing strategies by systematically testing variations and analyzing user behavior. By following best practices and practical tips for A/B testing, organizations can make data-driven decisions, continuously improve performance, and ultimately enhance the user experience. Embrace A/B testing as a powerful tool in your optimization toolkit to unlock insights, drive conversions, and achieve your business objectives.

For more information: www.ecbinternational.com